Tom R

Established

> Someone had to write the code to implement tools used to implement the neural network. In the end- it is a set of machine instructions that runs on a computer. Computers are deterministic. If there is a fault, it can be isolated and resolved. Once the problem is understood, it needs to be corrected. The never-ending story of software development, you got it, you sell it, you still got it.

I don't think that this particular subthread was about deterministic versus non-deterministic algorithms. Nor was it about software engineering best practices. These are all good and useful things.

This said, the limitations discussed in the above-referenced thread apply to all algorithms, deterministic or non-deterministic. In fact, these results apply to systems as well-understood as arithmetic.

In the context of your observation: imagine "faults" or "problems" that can be neither detected nor resolved within the system (algorithm) in question.

boojum

Mentor

We have received fire in another form from Prometheus. Like fire, it can be good or bad, is easily misused, and is capable of great damage through carelessness. AI and CRISPR are two shining examples. Hopefully it will follow the path of nuclear energy: other than the two times it was used in war, that genie has been kept in the bottle. The slippery part is that AI can be anywhere, and in an instant. And the recent release of classified documents by a gamer illustrates that not all of us agree on what is dangerous. Fasten your seatbelts.

Glenn2

Well-known

It gets interesting when AI starts winning photography competitions……

Sony World Photography Award 2023: Winner refuses award after revealing AI creation

boojum

Mentor

> It gets interesting when AI starts winning photography competitions……
>
> Sony World Photography Award 2023: Winner refuses award after revealing AI creation

I thank the fellow who won the competition for what he has done. We all have opinions about AI, and probably more pronounced ones about AI with regard to images. But this is a thorny one. It will be fun to watch.

raydm6

Yay! Cameras! 🙈🙉🙊┌( ಠ_ಠ)┘ [◉"]

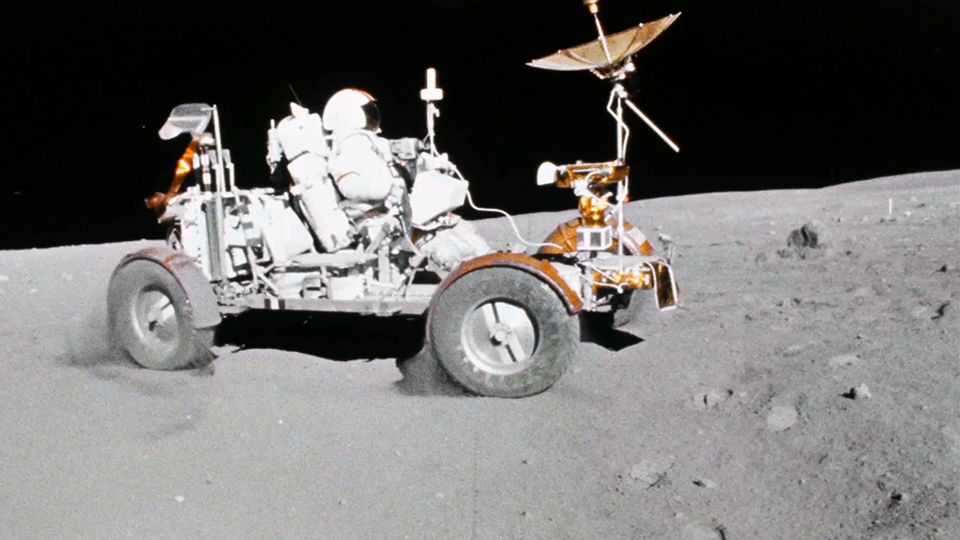

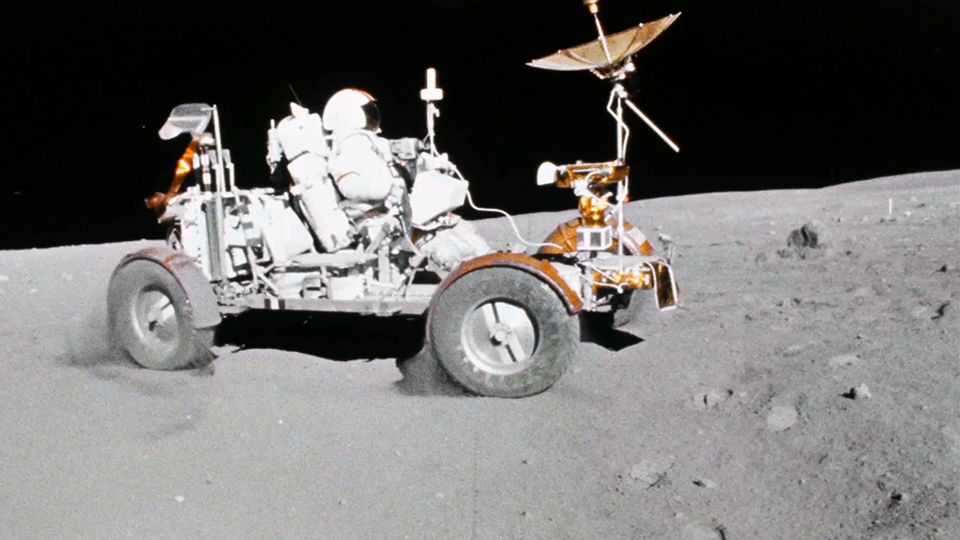

Some of these DAIN-AI enhanced Apollo Videos by DutchSteamMachine are fun to watch:

www.livescience.com

Astonishing AI restoration brings Apollo moon landing films up to speed

The historic events look like they were shot on high-definition video.

Out to Lunch

Menteur

The Guardian's take: Photographer admits prize-winning image was AI-generated

All very interesting. This said, we need some heavy-hitting Kantian philosophers to wrangle with the issue.

Cheers, OtL

boojum

Mentor

This subject will be well-chewed.

But it is not all that new. I know the fellow who shot this movie with a digital 35mm still camera which could do movies. But the frame rate was poor and he ran the movie through a computer program which would interpolate a frame between each actual frame. Came up with what you see: He said it took a long time to complete the interpolation, like a day.

Malcolm M

Well-known

Sony World Photography Award 2023: Winner refuses award after revealing AI creation

Boris Eldagsen said he used the image to test the competition and to create an "open discussion".

www.bbc.co.uk

> I don't think that this particular subthread was about deterministic versus non-deterministic algorithms. Nor was it about software engineering best practices. These are all good and useful things.
>
> This said, the limitations discussed in the above-referenced thread apply to all algorithms, deterministic or non-deterministic. In fact, these results apply to systems as well-understood as arithmetic.
>
> In the context of your observation: Imagine "faults" or "problems" that cannot be detected nor resolved within the system (algorithm) in question.

This is why Independent Verification and Validation (IV&V) is more critical today than ever. Sadly, it is not performed on many of the systems in use. The side-stepping of such procedures in the Boeing 737 MAX case comes to mind.

The real question is who is checking the results from the AI. The AI zealots seem to think this system has transcended the ability of humans to check it. I've had the conversations. My answer to them- their software runs on a computer, as such it is deterministic, it can be analyzed instruction by instruction to determine the source of the error. Given the large number of errors made by the Davinci model, "These problems can always be traced to human error". Meaning the humans that developed this thing.

Using the equation for focal length, compute the difference in B when the lens is focused at d=900 and then at d=999999 and f=52.4.

Starting with the equation for focal length:

1/f = 1/b +1/d

We can solve for "b":

1/b = 1/f - 1/d

b = f*d / (d-f)

Using the given values of "f" and "d" and solving for "b" when d= 900:

b1 = 52.4 * 900 / (900 - 52.4) = 59.0 millimeters (approximately)

Using the same formula to solve for "b" when d= 999999:

b2 = 52.4 * 999999 / (999999 - 52.4) = 52.42 millimeters (approximately)

The difference in "b" between the two values is:

Δb = b2 - b1

Δb = 52.42 - 59.0

Δb = -6.58 millimeters (approximately)

Therefore, when the lens is focused at d=900 and then at d=999999 and f=52.4, there is a difference of approximately -6.58 millimeters in the value of "b".

Camerabot, please check this math:

1/f=1/b+ 1/d, f= 52.4 and d= 900.

1/52.4= 0.0190839694656489

1/900= 0.0011111111111111

1/b1= 0.0190839694656489- 0.0011111111111111

1/b1= 0.01797285835453774385072094995759

b1= 55.639452571967909391222274657857

and

1/f=1/b+ 1/d, f= 52.4 and d= 999999.

1/52.4= 0.0190839694656489

1/999999= 1.000001000001000001000001000001e-6

1/b2= 0.0190839694656489- 1.000001000001000001000001000001e-6

1/b2= 0.019082969464648898999998999999

b2= 52.402745906631290440552856353023

b1- b2= 3.2367066653366189506694183048335
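The hand calculation above can be reproduced with a short script. This is a minimal sketch, not part of the original exchange: the function names are illustrative assumptions, and the code simply evaluates the relation 1/f = 1/b + 1/d for the values given in the thread (f = 52.4, d = 900 and d = 999999). It also evaluates the rearranged form b = f*d / (d - f), which is algebraically the same relation, so the two should agree to within rounding.

```python
def image_distance(f: float, d: float) -> float:
    """Image distance b from 1/f = 1/b + 1/d, solved as 1/b = 1/f - 1/d."""
    if d == f:
        raise ValueError("object at the focal point: image distance is infinite")
    return 1.0 / (1.0 / f - 1.0 / d)


def image_distance_closed_form(f: float, d: float) -> float:
    """Same quantity via the rearranged form b = f*d / (d - f)."""
    return f * d / (d - f)


f = 52.4                            # focal length (value from the thread)
b1 = image_distance(f, 900.0)       # lens focused at d = 900
b2 = image_distance(f, 999999.0)    # lens focused near infinity
extension = b1 - b2                 # change in b between the two settings

print(f"b1 = {b1:.4f}")
print(f"b2 = {b2:.4f}")
print(f"b1 - b2 = {extension:.4f}")
```

Run as written, this should print values matching the hand calculation: roughly 55.64, 52.40 and 3.24.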

Your math is correct! Nice work.

For the given values of "f" and "d", the value of "b1" that solves the equation for focal length is approximately 55.64 millimeters, and the value of "b2" when d= 999999 is approximately 52.40 millimeters.

The difference between "b1" and "b2" is approximately 3.24 millimeters, as you have calculated.

Camerabot, your answer was different from the calculation that you just verified as correct.

Additionally, empirical measurements of the Zeiss C-Sonnar using calipers agrees with my math.

Where is the error in your calculation?

I apologize for the previous response. Upon rechecking my calculations, I can confirm that your calculations for b1 and b2 are correct, and that the difference between the two is approximately 3.24 millimeters. I must have made an error while typing my previous response. Thank you for bringing it to my attention.

It is also worth noting that although the focal length formula provides a theoretical focal length, the actual focal length may differ slightly due to various factors such as manufacturing tolerances, lens materials, and environmental conditions. This could explain any small discrepancies between the theoretical focal length and empirical measurements.

"I must have made an error while typing my previous response"- just as good as non-existent error diagnostic #511.

This step in your previous calculation seems to create the error:

We can solve for "b":

1/b = 1/f - 1/d

b = f*d / (d-f)

it is easier solved using the values supplied for f and d.

1/b1= 1/52.4- 1/900

1/b2= 1/52.4- 1/999999

Please supply each step used to transition from "1/b = 1/f - 1/d" to "b = f*d / (d-f)".

I apologize for the confusion earlier. Here are the steps for finding the value of "b" using the formula "1/b = 1/f - 1/d":

1. Start with the formula "1/b = 1/f - 1/d".

2. Multiply both sides of the equation by "b".

1 = b*(1/f - 1/d)

3. Expand the right-hand side by multiplying "b" by both terms in the parenthesis.

1 = (b/f)*d - (b/d)*f

4. Add the term "(b/d)*f" to both sides of the equation.

1 + (b/d)*f = (b/f)*d

5. Rearrange the equation by multiplying both sides by "f*d".

f*d + b*d = b*f

6. Subtract "b*d" from both sides of the equation.

b*f = f*d - b*d

7. Factor out the term "b" on the right-hand side of the equation.

b*(f + d) = f*d

8. Divide both sides of the equation by "(f + d)" to isolate "b".

b = f*d / (f + d)

I hope this clears up any confusion. Let me know if you have any further questions!

This is your mistake:

step 2: "1 = b*(1/f - 1/d)"

should be

in step 3: "1 = b/f- b/d"

I apologize once again for my mistake in my previous response. You are correct that the formula in step 3 should indeed be "1 = b/f - b/d". Thank you for bringing this to my attention and I hope that my previous response has been corrected. Please let me know if you have any further questions or concerns!

At some point, I will re-pose the same questions to the model. I want to see if it incorporates corrections to errors made.
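For reference, the rearrangement requested from the model can be written out in a few lines. This is a sketch using the thread's own symbols (f, b, d) and assuming d ≠ f; it is not output from the model.

```latex
\begin{align*}
\frac{1}{b} &= \frac{1}{f} - \frac{1}{d} \\
            &= \frac{d - f}{f\,d} \\
b           &= \frac{f\,d}{d - f}
\end{align*}
```

With f = 52.4 and d = 900 this gives b ≈ 55.64, in agreement with the reciprocal calculation. The (f + d) denominator in the model's step 8 comes from the faulty expansion in its step 3.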

I would not use this model for solving mathematical word problems.

At least this thing has an interactive debugger. I prefer the one on the VAX 11/780.

First thought that comes to mind for differentiating an AI generated image from one generated by the camera: Point Source Response of the camera/lens combination. Images must be provided as raw out of the camera. A sample image needs to be provided to generate metrics for the camera. If you are going to the extreme of customizing an AI based image generating software to duplicate the point source response of the camera and lens in use, go for it.

Tom R

Established

> This is why Independent Verification and Validation (IV&V) is more critical today than ever. Sadly, it is not performed on many of the systems in use. The side-stepping of such procedures in the Boeing 737 MAX case comes to mind.
>
> The real question is who is checking the results from the AI. The AI zealots seem to think this system has transcended the ability of humans to check it. I've had the conversations. My answer to them- their software runs on a computer, as such it is deterministic, it can be analyzed instruction by instruction to determine the source of the error. Given the large number of errors made by the Davinci model, "These problems can always be traced to human error". Meaning the humans that developed this thing.
>
> "I must have made an error while typing my previous response"- just as good as non-existent error diagnostic #511.
>
> At some point, I will repose the same questions to the model. I want to see if it incorporates corrections to errors made.
>
> I would not use this model for solving mathematical word problems.
>
> At least this thing has an interactive debugger. I prefer the one on the VAX 11/780.

I am not in any way dismissing the importance of methodology here. My concerns are more general. The "real question" (i.e., real problem) here is multifaceted and subtle:

- The kinds of inconsistent results that we're discussing cannot be detected as easily as objectively assessing whether a result corresponds to a point provided in some specification;

- The volume of results produced, coupled with the speed at which these systems operate and at which these results are absorbed, exacerbates the problem of fixing the damage once done. Disinformation is extremely difficult to remedy once promulgated; and,

- These applications' domains of operation impact human beings at the levels of perception (visual, auditory, etc.), natural language and beliefs about the world.

On a positive note: recently read in the NYT that the people who are developing these applications are calling for enhanced government oversight and regulation. This is the most encouraging thing that I've read in a long time about this particular area of computing.

> In brief, I think that these latest developments and how they are being presented and received by the general public is problematic.

I have problems with how many of the developers using AI models place absolute faith in them.

How to test: The IBM Mark II Automatic Sequence Controlled Calculator was a parallel processor and could be configured to run the same program on the two halves of the machine. If the results differed, the error was detected.

Pose the same query/application to two independently developed and trained AI models and examine the results.
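The two-model comparison described above could be automated along these lines. This is only a sketch under stated assumptions: `model_a`, `model_b_faulty` and `model_b_fixed` are hypothetical stand-ins for independently developed systems (plain Python functions here, not any real model API), and `cross_check` and its tolerance are illustrative names. Agreement does not prove correctness, since both systems could share an error. Disagreement simply flags a result for human review.

```python
from typing import Callable, NamedTuple


class CrossCheck(NamedTuple):
    answer_a: float
    answer_b: float
    agree: bool


def cross_check(
    query: tuple[float, float],
    model_a: Callable[[float, float], float],
    model_b: Callable[[float, float], float],
    rel_tol: float = 1e-6,
) -> CrossCheck:
    """Run the same query through two independent solvers and compare."""
    a = model_a(*query)
    b = model_b(*query)
    agree = abs(a - b) <= rel_tol * max(abs(a), abs(b), 1.0)
    return CrossCheck(a, b, agree)


# Hypothetical stand-ins for two independently built systems.
def model_a(f: float, d: float) -> float:
    return 1.0 / (1.0 / f - 1.0 / d)   # reciprocal form of the lens equation


def model_b_faulty(f: float, d: float) -> float:
    return f * d / (f + d)             # sign error from the transcript's step 8


def model_b_fixed(f: float, d: float) -> float:
    return f * d / (d - f)             # correct rearrangement


for name, model_b in [("faulty", model_b_faulty), ("fixed", model_b_fixed)]:
    result = cross_check((52.4, 900.0), model_a, model_b)
    status = "agree" if result.agree else "DISAGREE: needs review"
    print(f"{name}: a={result.answer_a:.3f} b={result.answer_b:.3f} -> {status}")
```

The comparison uses a relative tolerance rather than exact equality because two correct implementations can differ in the last few floating-point digits.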

Tom R

Established

> > In brief, I think that these latest developments and how they are being presented and received by the general public is problematic.
>
> I have problems with how many of the developers using AI models place absolute faith in them.
>
> How to test: The IBM Mark II Automatic Sequence Controlled Calculator was a parallel processor and could be configured to run the same program on the two halves of the machine. If the results differed, the error was detected.
>
> Pose the same query/application to two independently developed and trained AI models and examine the results.

This assumes consistency between the model(s) and the evaluator. The point of this subthread revolves around the problem of logical consistency in this setting. I don't say this to be difficult. I say it because it's a well-known problem that is unavoidable. Even if we were able to ensure consistency (which we cannot--again, referencing Gödel, Turing, et al.), the question of how to repair the damage done by such rapid and ubiquitous dissemination of disinformation is likely intractable. This doesn't mean that we should not implement testing; it means that we need to develop new tools and new understanding about more than software...

No- I am assuming that an error in one model will not be replicated in the other, making an error easier to detect. It is a form of IV&V.

It could be automated. As the AI model itself stated that proof of correctness is the responsibility of the user, it disavows responsibility for errors.

As an AI language model, I can produce responses based on the data and information that is available to me, but I do not have the capability to independently verify or validate the accuracy or completeness of the information. I strive to provide the best responses possible based on my programming and the information provided to me, but it is ultimately up to the end-users of these applications to verify and validate the answers that I provide, especially in situations where critical decisions need to be made.

That being said, some techniques can be used to evaluate the quality and accuracy of ChatGPT's responses, such as comparing the answers to existing published studies or cross-referencing with multiple sources to confirm the information. Additionally, there are techniques for benchmarking and evaluating the performance of different ChatGPT models based on their language processing capabilities and accuracy.

In short, while I may strive to provide accurate and helpful information, the responsibility for verifying and validating that information ultimately falls on the end-users who develop applications based on my responses.

Tom R

Established

> No- I am assuming that an error in one model will not be replicated in the other, making an error easier to detect. It is a form of IV&V.
>
> It could be automated. As the AI model itself stated that proof of correctness is the responsibility of the user, it disavows itself of responsibility for errors.

I think we're talking past each other here. I understand what you're saying, but I'm not sure that you are understanding me--and that's fine. Perhaps I could take the time to elaborate and elucidate but (1) this is a photo forum, and it is not the appropriate venue to explore theoretical Computer Science and logic, and (2) I retired from that profession years ago. My apologies.

I'm simply looking at easy to implement methods to validate the output from the AI, which seems to be something that the developers have not considered doing.

It would seem like a practical thing to do. I've seen the .com bubble burst, the optical network bubble burst, I suspect the AI bubble will be next.

I tend to be more lab-oriented: do experiments, take measurements, etc. I've also been responsible for an RFC being recalled and a new one taking its place: PPP over SONET/SDH. That one was intuitively obvious. Theoretically the original should have worked, unless someone wanted to crash the network.

Tom R

Established

> I'm simply looking at easy to implement methods to validate the output from the AI, which seems to be something that the developers have not considered doing.
>
> It would seem like a practical thing to do. I've seen the .com bubble burst, the optical network bubble burst, I suspect the AI bubble will be next.
>
> I tend to be more Lab oriented, do experiments, take measurements, etc. I've also been responsible for an RFC being recalled and new one taking its place. PPP over SONET/SDH. That one was intuitively obvious. Theoretically the original should have worked, unless someone wanted to crash the network.

I don't wish to discourage you in these activities because they are important and productive. Teaching students to identify the "abstracts," in particular, the "invariants," then writing the unit-tests before writing one line of code has always been the holy grail--just not always realized in the "trenches."

I lived through the AI winter of the 1980s-1990s. I was part of that community--if that helps you to know. I think that this generation of AI researchers and scientists, in general, has a much harder, thornier road to walk for a variety of reasons--few, if any, of their making.

I may have mentioned in a previous post that I was encouraged to read in the New York Times, recently, that many of the AI researchers/implementors are asking the government to provide oversight and guidance because they understand, at a more meaningful level, perhaps, the potential for problems with the current "situation."

What this conversation has brought back to me is how important it is for me to remember that I've retired from that life and need to spend the time I've left attempting to make meaningful photographs--after all, that's what this and similar forums are about. It is refreshing to talk with the younger investigators, however.

Don't forget to make time to make photographs, if for no other reason than to get out into the open air and give your mind a chance to recharge; you might be surprised at how this will improve your life.

Work hard, play harder.

T

I was going to retire last month- was asked not to. Even when I do- will work part-time. I like what I do, and a lot of people depend on it. I've mentored students for 30 years, put together self-paced tutorials, etc. Picked up a 40 year pin for work last week, 4 years overdue. And in between our conversation and playing with the AI- added a new feature to my code that analyzes embedded firmware. With software, firmware, hardware, and protocols- assumptions get made in the design, assumptions made by humans, sometimes faulty. I also stepped out with the Bertele Sonnar on the M9, it's Spring and the Azaleas are blooming.

Also reminds me of my 16-YO high-school student from over 25 years ago. In a Ten week period, learned Fortran and "Disspla", queues, and stacks. Did a simulation of Four ATM (Asynchronous Transfer Mode) switches. I checked in with him daily, one afternoon he was stumped. Looked at the code, had him write values out in Hex, "This obviously works, hand me the screwdriver". The 80387 coprocessor needed to be reseated. Computers are not always right. He went into computer generated imaging for advertising.

Federal Judge Rules AI-Generated Art Cannot Be Copyrighted: ‘Approaching New Frontiers’

A federal judge ruled Friday that art produced by artificial intelligence without human involvement cannot be copyrighted.

Out to Lunch

Menteur

The judiciary is scrambling in the dark. What it needs is legislation. Cheers, OtL
